If you landed on this page by accident (i.e., it appears somewhat out of context), I would suggest first reading my description on the spiral arm → cosmic ray → climate link, and the cosmic ray flux signature in the iron meteorites.

General Remarks on Jahnke's critique

The manuscript by Jahnke (which was not accepted for publication by A&A) is an attempt to repeat my previous analyses (e.g., PRL and New Astronomy papers linked from here). Although Jahnke raises a few interesting aspects, his analysis suffers from several acute problems, because of which he obtains his negative result, that is, that there is no statistically significant periodicity in the data and that there is no evidence for cosmic ray flux variability.By far, the most notable problem is that Jahnke's analysis does not consider the measurement errors. In his analysis, poorly dated meteorites were given the same weight as those with better exposure age determinations. As I show below, this has a grave effect on the signal to noise ratio (S/N) and consequently, on the statistical significance of any result he obtains.

I begin by summarizing the few benign points considered by Jahnke. Then I describe at length the main faults in his analysis, and follow by carrying out a more suitable statistical analysis based on the Rayleigh spectrum method and show that a periodic signal of 145 Myr is present in the data, at a statistically significant confidence level, even if one considers the more stringent grouping chosen by Jahnke.

In the appendix I describe at length why the statistical tool I employ here is better than that used by Jahnke (at least for the type of signals we are looking for in the data) or in fact by myself in the previous analyses. Moreover, the calculation described in the appendix quantifies the S/N degradation brought about by considering poorly dated meteorites, as Jahnke did. This quantitatively shows why his analysis could not have obtained any signal at a statistically significant level.

Simply said, the Jahnke's analysis literally introduces more noise than it does a signal and its null result is basically meaningless.

Detailed Comments

Some benign remarks

- Since I am not a meteoriticist, I have no way of judging whether all iron meteorites of a single classification group (according to the old or the recent classification) originated from the same parent body, nor whether they broke off at the same event. Thus, it is better in this respect to be more conservative. That is, as Jahnke points out, once recent claims that several iron groups should be grouped together were published, whether they are correct or not, we should consider the more conservative point of view to reduce the possibility that clustering is the result of single events producing multiple meteorites. Nevertheless, from the fact that we have some cases where the exposure ages of many meteorites span a long time range (a few 108yr), it is clear that at least some meteorites of the same iron group classification do not originate from the same meteoritic break up event.

- As Jahnke points out, the K-S analysis does indeed have a bias, with a higher sensitivity in the center. Such that choosing a phase insensitive statistic is not a bad idea at all. However, as I show below, the analysis done by Jahnke is actually a degradation of the analysis previously done by myself for other reasons. Moreover, the insinuation that I tuned the phase of the K-S to artificially get a better significance has no place. The phase used in the K-S is the phase of the data obtained if the zero-point for the folding is today. That is, there was no tuning in this respect and it should have been checked by Jahnke before making these insinuations.

Critical Points

- The main difference between the analyses of Jahnke and my previous analysis, is not any of those mentioned by Jahnke. Instead, the main difference is that Jahnke considered meteorites which have very poor error determination. As I show in the appendix, this considerably degrades the signal to noise ratio (S/N). This can be easily seen. If one adds a meteorite which is not expected to contribute any signal (because its phase in the periodicity is effectively unknown due to the error), then the noise is increased without increasing the signal, thereby decreasing the S/N. This will in turn degrade the statistical significance of any positive result. For example, it degrades a signal significant at the 99% level to the notably less significant 90% level. In my previous analysis, I simply discarded meteorites with a quoted error of 100 Myr or more (which actually corresponds to about 70 Myr or more, since Vosage & Feldman overestimated their errors, as can be seen once the potassium ages are compared with other exposure ages). In the present analysis, I simply weigh them according to their expected contribution to the signal.

- Although the Hierarchical clustering method I employed in the previous analysis is not ideal, it has one major advantage over the "up" or "down" methods devised by Jahnke. That is, Jahnke's method introduces a systematic error in the age of the clustered meteorites. This can be seen by comparing the meteor "clusters" which were assigned different ages according to the direction of the clustering. In all these instances of a different assigned age, the "up" clustering gave an exposure ages which was typically 40 Myr higher than in the "down" case. Clearly, it is unacceptable to have a 1/6 of the meteorites with such a large systematic error. That is to say, the hierarchical method is not ideal, but the modification suggested by Jahnke is even worse. The systematic errors will degrade the signal.

Instead of degrading the Hierarchical method as Jahnke did, I introduce below a method which does not suffer from the above problems which arise when clustering the meteorites, though it does consider the possibility that meteorites of the same iron group could arise from the same breakup event. The method also has the advantage that it can straightforwardly be expanded to analyze the error distribution, and independently show that the error include the same 145 Myr cycle.

Re-analysis using the Rayleigh Periodogram

In his analysis, Jahnke introduced several modifications (whether legitimate, such as being more conservative with the meteoritic grouping, or illegitimate, such as carelessly using poorly dated meteorites) which reduced the S/N. Thus, it is worthwhile to see whether another statistical method exists which is better suited to answer the question of whether the clustering is real or not.The method described in this section is based on the Rayleigh periodogram analysis. It has various advantages and disadvantages relative to the K-S, and its Kuiper statistic cousin.

I begin by describing the method. I then extend it to the analysis of the distribution of errors, and describe its statistically significant results for a 145 Myr signal in the meteoritic data. In the appendix, I compare the method to the Kuiper statistic and show that at least for the type of signals we expect to see in the data, it is a much stronger tool.

The Rayleigh Analysis

The Rayleigh analysis (RA) method is a statistical tool useful for establishing periodic deviations from the uniform occurrence of discrete events. As shown in the appendix, the RA method is better suited for this type of analysis than the Kolmogorov-Smirnov or its Kuiper deviate. This is because the statistical significance obtained for sinusoidal deviations, which are of the kind we are searching here, is notably higher than the significance obtained in the K-S or Kuiper statistic. This is not to say that the RA is better in general. There are many cases where K-S and Kuiper statistics are better suited. For example, they are more appropriate when analyzing deviations from a given nonuniform distribution or finding non-periodic deviations.The essence of the RA method is finding statistical deviations from a 2D random walk generated by the set of random events. For comparison, the K-S and Kuiper statistics rely on deviations between the observed cumulative distribution of the random events and that of the given distribution. In essence, they are 1D random walks with some constraints.

More specifically, for each period tested by the RA method, the discrete events are assigned phases corresponding to their occurrence within the given period

. A 2D random walk can then be constructed based on the phases, where a step is taken in the direction of

. A 2D random walk can then be constructed based on the phases, where a step is taken in the direction of  where

where  and

and  are the two directions of the random walk, and the phase is

are the two directions of the random walk, and the phase is  . This walk essentially addresses the question of whether the phases are uniformly distributed or whether concentrated around a certain preferred phase.

. This walk essentially addresses the question of whether the phases are uniformly distributed or whether concentrated around a certain preferred phase. If, for example, the events are assigned constant weights, the sum of the walk can be described by the vector

|

, then the walk should be a random walk. The vector sum R(p) should be 0 on average, and the probability that the power PR≡ R(p)2, also called the Rayleigh power, will be larger than a value a is given by (e.g., Bai, ApJ, 397:584, 1992):

, then the walk should be a random walk. The vector sum R(p) should be 0 on average, and the probability that the power PR≡ R(p)2, also called the Rayleigh power, will be larger than a value a is given by (e.g., Bai, ApJ, 397:584, 1992):

|

Note that this probability is for a given frequency. If we are studying a range of frequencies, then we should increase the probability according to the effective number of frequencies we have. For example, with a 1/1000 Myr-1 resolution (because the data spans about 1000 Myr), we have ~6 independent frequencies between 100 and 250 Myr (the range taken by Jahnke), each one could randomly yield a large value.

The analysis is more complicated because of two important reasons irrespective of the actual method employed (whether K-S, Kuiper or Rayleigh).

First, the meteorites do not have the same weight. Some meteorites, for example, are poorly dated. If they have an error larger than the half the period, then their phase in the Rayleigh analysis will be totally random. Clearly, such "measurements" will only add noise but no real signal, thereby decreasing the SNR (as was done by Jahnke).

Second, meteorites of the same Iron group classification and similar ages are most likely products of the same parent objects which crumbled in the same event (or related events). This implies that not all clustering should be attributed to cosmic ray flux variations.

We shall now discuss both.

Effect of meteoritic age error

If a meteor is poorly dated, it is less likely to appear in the right phase to give the right signal (irrespective of the analysis). In the limit of a large error, it will contribute no signal but will increase the noise. It is therefore wise not to attribute the same weight to all meteorites.In the Rayleigh analysis, a gaussian distribution for the phases implies that a meteorite is only expected to contribute with a reduced weight of

. This is obtained after integrating the sine and cosine functions with a gaussian distribution. Thus, a more appropriate form for the power PR(p) is actually:

. This is obtained after integrating the sine and cosine functions with a gaussian distribution. Thus, a more appropriate form for the power PR(p) is actually:

![$$ P_R(p) = {1 \over \sum_i w_i} \left[ \left( \sum_i \cos \left( 2 \pi t_i \over p\right) w_i \right)^2 + \left( \sum_i \sin \left( 2 \pi t_i \over p \right) w_i \right)^2 \right].

$$](http://www.sciencebits.com//files/tex/0c584706b39e18e1e7c6a1c8c97d0c4bff2f01d7.png) |

Note that the errors quoted by Vosage et al., are larger than the actual errors. This can be seen if the Potassium age determinations are compared with the other independent exposure age methods (such as using 10Be). Once this is done using the data of Lavielle et al. (EPSL, 170:93, 1999), while remembering the systematic correction necessary for the methods employing short lived isotopes, one obtains that the typical error between the Potassium age determinations and the other methods is about 70% of the quoted error of the Vosage et al. data (The error contribution from the other methods is expected to be small according to the quoted errors they have).

Meteoritic multiplicity

As mentioned numerous times before, some of the clustering could be due to the breakup of a single parent body in one or a few related events. To avoid this problem, it was first suggested in my first analysis to cleanup the data by merging together meteorites of the same iron group classification. As pointed out by Jahnke, this does not come without its problems. Moreover, the cleanup method suggested by Jahnke has even worse problem, as it introduces an unacceptable systematic error in the ages.The Rayleigh periodogram offers a straightforward extension which does not introduce the systematic errors or the ambiguity of knowing to which cluster a meteorite should be clustered.

Instead of clustering the meteorites together, they can be analyzed separately, with the following two modifications.

First, since meteorites with the same Iron group classifications and a small age separation (e.g., < 100 Myr) are likely to be related, they should be considered with a total weight of one meteorite (that is, their weight can be reduced by a factor of the number of members in the group). In some cases, there are many meteorites of the same classification which span a longer range. For example, there are 18 IIIAB group meteorites in a 290 Myr range. Clearly, they don't all arise from the same event, nor are they 18 independent break up events. Thus, it is reasonable to reduce the weight of each meteorites by a factor of 18 / (290Myr/100Myr).

Using this modification, we don't increase the statistical weight of a single breakup cluster, nor do we add systematic errors by accidentally merging wrong meteorites. However, the statistical analysis is more complicated and we cannot use the above equation for Prob(PR>a). We will use instead a Monte Carlo simulation.

The modified Rayleigh periodogram and the related Errorgram

Following the above points, I carried out a modified Rayleigh analysis, with a variable weight (assigning a weight according to the error, and then limiting the total weight for each cluster, based on the recent, more stringent, Iron classification).To estimate the statistical significance, I did a Monte Carlo simulation. Meteoritic break up events were randomly chosen between 100 and 900 Myr, and then smeared with a 200 Myr gaussian distribution to avoid edge effects. Each meteorite was assigned an error from those actually measured. A group of meteorites was simulated as a number of breakup events according to the width of the distribution and calculated as below. The ages of meteorites comprising each breakup event are chosen as the breakup event plus a random error realized using the assigned error of the given meteorite.

The actual number of events a group comprises is calculated as above: total range/100 Myr. If the number is smaller than 1, then 1 group is taken. If larger than one, the number of events is taken as the truncated integer of the obtained number with a probability of one minus the faction obtained. In the other cases, the higher integer is taken as the number of groups. (For example, in the example mentioned above, the 2.9 groups were chosen as 3 events in 90% of the time, and 2 events in 10% of the time).

The Rayleigh periodogram and the variable error permit yet another analysis. Because the errors are not fixed, they too can independently contain a clustering signal.

We define an error vector E as

with:

with:

|

|

.

.Clearly, if there is no real clustering in the data, then the errors

are not expected to be correlated with ti, in which case

are not expected to be correlated with ti, in which case  , and E1→ 0 and similarly E2→ 0, even if there is a notably large R. In other words, if a large R is obtained as a statistical fluke, there is no reason a priori for a large E to arise, since there is no reason for the fluke to arrange the errors as well.

, and E1→ 0 and similarly E2→ 0, even if there is a notably large R. In other words, if a large R is obtained as a statistical fluke, there is no reason a priori for a large E to arise, since there is no reason for the fluke to arrange the errors as well.However, if the signal in PR(p) is real and not coincidental, we expect a large PE(p) as well. This is because we expect the ages with larger errors to exhibit less clustering around the phase of maximum R, since it is easier for poorly dated meteorites to stray off the preferred phase. We therefore expect E to be in opposite direction of R. This implies that having a large E can be used as an independent indicator to show that the data has a real correlation. Moreover, for consistency, we can also calculate the angle cosine μ between the vectors, which should be ~ -1 if the signal is real:

|

Results of the Rayleigh analysis

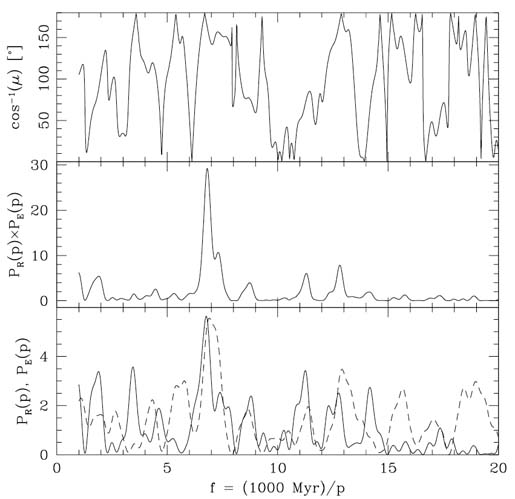

I now proceed to perform the Rayleigh analysis using the stricter grouping suggested by Jahnke.The Rayleigh power spectrum PR(p), the independent error spectrum PE(p) and the angle between the R and E vectors were calculated as a function of frequency. The results are depicted in the figure.

[collapse title="Power Spectra"]

The results of the appendix also explain why the analysis performed by Jahnke could not yield any signal. Degrading the 6% statistical significance any further by letting poorly dated meteorites deteriorate the S/N, or by using the Kuiper statistic with its lower statistical efficiency (relative to the Rayleigh analysis, for this type of a signal), is evidently more than sufficient to remove any trace of the 145 Myr periodicity.

But this is not all. Besides PR there is also the independent PE signal. The Monte Carlo simulation yields a probability of 3.7% to obtain the signal in the error distribution. The combined probability obtained in the Monte Carlo simulation is 0.2% (since it is roughly the product for the probabilities of both spectra, it indicates that the signals are indeed independent).

Last, for consistency, we see that the angle between R and E is around 180°. That is, the vectors point in opposite directions as predicted. Clearly, the 145 Myr signal is present in the data at high statistical significance.

We can refine the test and ask ourselves, what is the probability that a meteoritic exposure age signal will be present in the data which agrees with the periodicity found in ice-age epochs on Earth, that is, with a period of 145 ± 7 Myr. The probabilities for this to occur are 1.0%, 0.6% and 0.06% respectively. Clearly, it would be shear coincidence for the meteoritic exposure ages to happen to (a) exhibit a periodicity in their their exposure ages (b) independently agree in their error distribution (c) agree in the phase between the errors and clustering, (d) and happens to agree with the climatic periodicity! Note also that this doesn't even take into account the fact that the phase of the 145 Myr period in the meteoritic data is in agreement with the phase of the ice-age epochs (which would contribute yet another factor of 5 in the (im)probability estimate).

Summary

I have shown above that the analysis of Jahnke is critically flawed by considering poorly dated meteorites. As I demonstrate in the appendix, this can easily reduce a 1% significant peak to the 10% level. This is the main reason why the 145 Myr peak disappeared from Jahnke's analysis, not the fact that he was more stringent in the clustering he used. As for the two new clustering methods introduced by him, the methods give rise to unacceptable systematic errors and should therefore not be used.In the new method described above, based on the Rayleigh analysis, no re-clustering is assumed, only a reduction of the meteoritic weights. This has the advantage of introducing no systematic error and lacks the ambiguity present when clustering a large group of meteorites spread over a long period.

Moreover, the Rayleigh analysis appears to be a better method for the type of signals we are testing for (see also Leahy et al., ApJ, 272:256, 1983, who compares the Rayleigh analysis to epoch folding and reach similar conclusions). Once we use a method which is expected to yield a better S/N, we recover the 145 Myr periodicity, with a high confidence level, even if we keep ourselves to a much more stringent data set, as restricted by Jahnke.

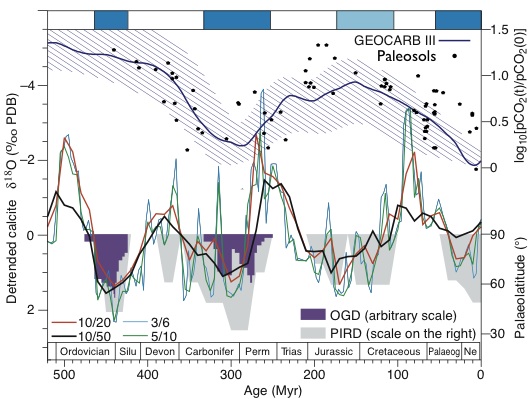

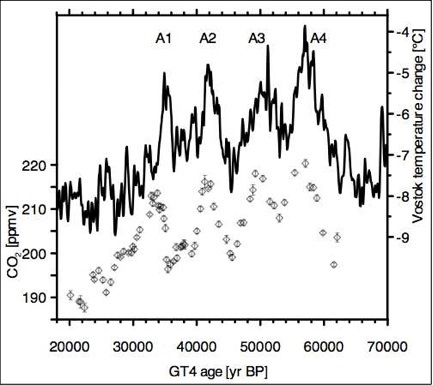

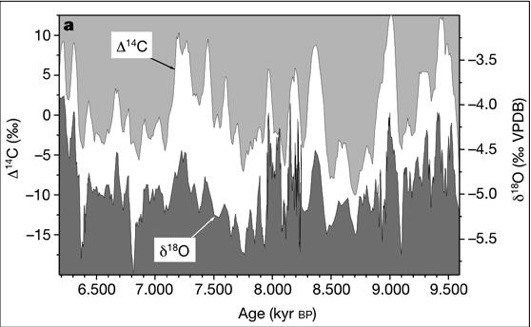

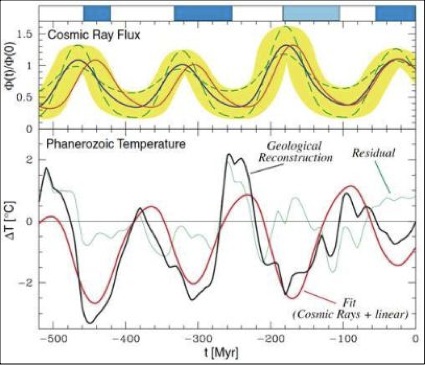

It is also worthwhile noting that the periodicity found in the meteoritic exposure ages is not unrelated to other signals, which have consistently the same period and phase.

In particular, the cosmic ray flux is predicted, using purely astronomical data, to vary at roughly the same period (135±25 Myr) and phase. Thus, the fact that a signal is present should not be surprising at all. On the contrary, it would have been a great surprise if no signal would have been observed in the meteorites!

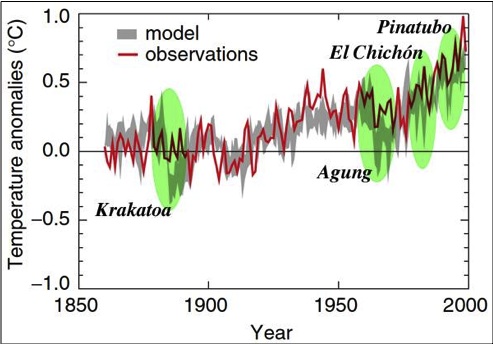

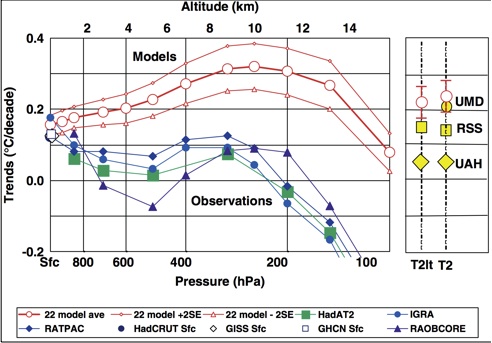

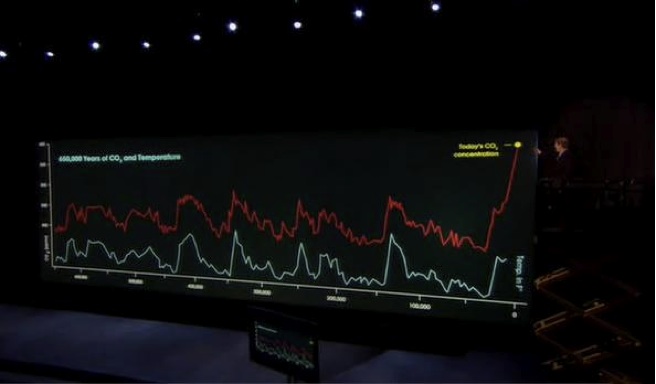

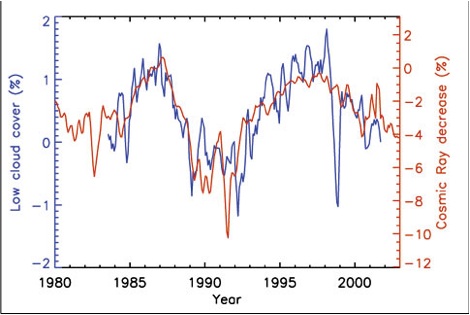

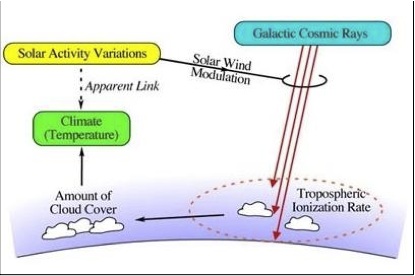

Since cosmic rays are suspected as being a climate driver (with the first suggestion by Ney, already in 1959!), it is also no surprise that various sedimentational and independently geochemical reconstructions of the terrestrial climate reveal climate variations with the same period and phase as that seen in the exposure ages of meteorites.

[collapsed title="Appendix: Rayleigh Analysis and Kuiper statistics comparison"]

Comparison between the Rayleigh Analysis and Kuiper statistics for finding a periodic signal

Above, I presented a statistical analysis based on the Rayleigh periodogram. The question arises, why is this analysis better than the Kuiper analysis (which is the modified K-S analysis used by Jahnke). The answer is that in general, it does not have to be the case, but it certainly is for the type of signals we are looking for. In particular, the K-S and Kuiper analyses, are better suited for finding deviations from a non-uniform distribution and from non-periodic distribution.The aim of the statistical analyses introduced, either by Jahnke or by myself, is to estimate the probability with which one can rule out the null hypothesis, that the meteorites are distributed homogeneously.

To see the type of statistical significances the methods can yield, we will look at a simple, yet realistic distribution for the ages, and calculate the significances with which the above null hypothesis can be ruled out.

Lets assume that the signal in the data has a probability distribution function of

![$$

P(t)= {1\over \Delta T} \left[1+\alpha \sin \left(2 \pi t \over p_0\right)\right]

$$](http://www.sciencebits.com//files/tex/50801e7b6769bbaf1fe79c253a760b38a3ece1fb.png) |

If we have N measurements with the above distribution, the normalized Rayleigh amplitude will be:

|

. Thus,

. Thus,

![$$

{\bf R}(p) = {\sqrt{N} \over \Delta T } \int \left[1+\alpha \sin \left(2 \pi t \over p_0\right)\right] \left( \cos \left( 2 \pi t_i \over p\right) {{\hat{\bf e}}_1} + \sin \left( 2 \pi t_i \over p \right) {{\hat{\bf e}}_2} \right)

$$](http://www.sciencebits.com//files/tex/0b6ae18e1f000ad7b1229fdbe139e18805f7c1c9.png) |

and

and  , while

, while  . Hence, we find:

. Hence, we find:

|

|

. The probability that R2 will be larger than a value a is given by

. The probability that R2 will be larger than a value a is given by

|

|

In the case of the Kuiper statistics, we first need to calculate the largest displacement between the cumulative distribution and the homogeneous distribution.

Given the above probability distribution function, folded between 0 and p0, the cumulative function normalized to p0=1 is:

![$$

{\mathrm{Prob}} (t>\tau) = \tau - \left( {\alpha \over 2 } \left[ \cos\left( {2 \pi \tau} + \phi_0 \right) - \cos\left( \phi_0 \right) \right] \right)

$$](http://www.sciencebits.com//files/tex/e5123de9532251b343986764ce40132ca5b90eda.png) |

|

and gives:

and gives:

|

![$$

{\mathrm{Prob}}(>V) = Q_{KP} \left( \left[ \sqrt{N} + 0.155 +0.24/\sqrt{N} \right]V\right)

$$](http://www.sciencebits.com//files/tex/0e0bc01d98f013aaa834284b5468ccb04c58d102.png) |

|

Thus, for this distribution function, the Rayleigh analysis requires half as many points to reach the same statistical significance. Conversely, for the same number of points, the Kuiper statistic will result with a less significant result for the type of probability distribution function we are interested in. For example, if α≅0.7, 40 points are required to reach a 1% significance with the Rayleigh statistic. The same 40 points, would result with a 28% significance (which is insignificant!) with the Kuiper statistic.

[/collapse] [collapsed title="Appendix B: Degradation by poorly dated meteorites"] The above results also explains why adding noisy points heavily degrades the statistical significance. Suppose we double the number of points, by adding noisy points that have no correlation with the signal. In such a case, we double N, but decrease α by a factor of 2. Since the significance is a function of Nα2, the significance will deteriorate. For example, if we have about 40 points with α≅0.7, the significance will deteriorate from 1% to 10%. It is therefore important not to include in the analysis points with poor error determinations.

[/collapse]

More online Material

- The Milky Way Spiral Arms, Ice-Age Epochs and the Cosmic Ray Connection

- Cosmic Rays and Climate - A general description of the topic the link

- Climate Debate - More on related debates.

- Anthropogenic or Solar? - More on the attribution of global warming.

is the albedo.

is the albedo.

![\[ T = \left((1-\alpha) F_0 / 4 \over \epsilon \sigma\right)^{1/4} = \left(S \over \epsilon \sigma\right)^{1/4}, \]](http://www.sciencebits.com//files/tex/edc53ba1c856c43419a788038e6f2459e479683c.png)

nor

nor

.

.

) and how much the emissivity changes as a function of temperature.

) and how much the emissivity changes as a function of temperature.  is negative. This implies that

is negative. This implies that  decreases and the sensitivity

decreases and the sensitivity  increases.

increases.

is the effective change to the energy budget through feedback i, following a change in temperature

is the effective change to the energy budget through feedback i, following a change in temperature  .

.

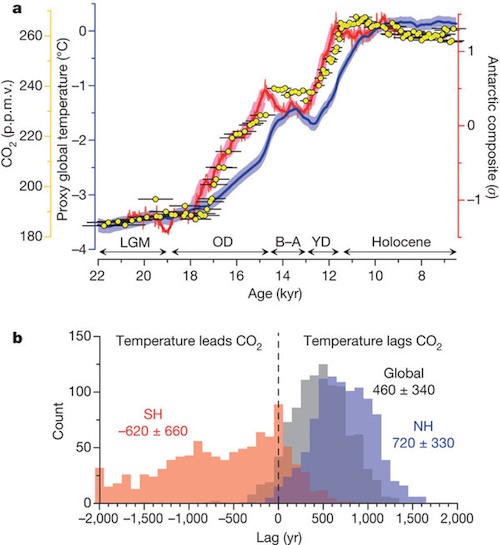

, from some climate driver other than CO2 variations (e.g, from the Milankovich cycles). If the sensitivity is given by

, from some climate driver other than CO2 variations (e.g, from the Milankovich cycles). If the sensitivity is given by  , assuming for a moment that CO2 does not play a role.

, assuming for a moment that CO2 does not play a role.  causes a change in the CO2. Per unit temperature change, it is:

causes a change in the CO2. Per unit temperature change, it is:

change, it is

change, it is

, which is the temperature response to changes in the amount of CO2. Thus, the total temperature change is:

, which is the temperature response to changes in the amount of CO2. Thus, the total temperature change is:

, the positive CO2 feedback will make the system unstable. Any small change in the radiative balance would cause a CO2 variation that will make the response diverge. Since we know that the climate system is stable (we don't get runaway conditions like on Venus, nor did we ever have them), the sensitivity is less than the critical sensitivity we obtained.

, the positive CO2 feedback will make the system unstable. Any small change in the radiative balance would cause a CO2 variation that will make the response diverge. Since we know that the climate system is stable (we don't get runaway conditions like on Venus, nor did we ever have them), the sensitivity is less than the critical sensitivity we obtained.

.jpg)